For a bionic limb to be truly “mind-controlled”, it should respond to the user’s thoughts the same way that natural limbs do. Everyone agrees that this is desirable. But the technologies required to achieve this goal remain uncertain, especially on a mass scale.

What are Mind-Controlled Bionic Limbs?

By the simplest definition, every prosthesis is already a mind-controlled device. No pirate’s hook ever did anything of its own accord.

But this is not what most people mean when they talk about mind-controlled bionic limbs. What they mean is controlling these devices purely with thought, intuitively, the same way that we control our natural limbs.

Such a solution certainly represents the ultimate prosthesis, but the science of how to achieve this is not yet settled.

Let’s take bionic hands as an example. To achieve true mind control, the user must have not only precise control of the wrist, hand, and each of the hand’s digits, but also advanced sensory feedback so that he can make the same kind of intuitive adjustments that people make with their natural hands.

How do we build such a device? For control, we can surgically embed myoelectric sensors to get a clean read of muscle movements and then use advanced pattern recognition software to translate these movements into commands for the bionic hand.

For sensory feedback, we can attach sensors to the bionic hand and then surgically embed electrodes to directly interface with sensory nerves in the residual limb (i.e. a neural interface). When the sensors on the bionic hand detect sensations, a control system can cause the embedded electrodes to electrically stimulate the nerves so that the brain “feels” roughly what the sensors feel.
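For readers curious about what that control system does, here is a minimal Python sketch of the mapping step. It assumes a simple linear relationship between fingertip pressure and stimulation strength and a per-user calibration table linking each finger sensor to one electrode. The names `CHANNEL_MAP`, `StimLimits`, and `pressure_to_stim`, and all the numbers, are hypothetical; real neural interfaces tune several stimulation parameters and are far more sophisticated.

```python
from dataclasses import dataclass

# Hypothetical per-user calibration table: which implanted electrode channel
# evokes a sensation in which finger. In practice this is worked out by testing
# each electrode with the user; these channel numbers are invented.
CHANNEL_MAP = {"thumb": 2, "index": 0, "pinky": 5}


@dataclass
class StimLimits:
    threshold: float  # weakest stimulation the user can perceive (arbitrary units)
    maximum: float    # strongest stimulation that is still comfortable


def pressure_to_stim(pressure: float, limits: StimLimits, full_scale: float = 10.0) -> float:
    """Linearly map a fingertip pressure reading (0..full_scale) to a stimulation level.

    No contact means no stimulation; any contact starts at the perception
    threshold so that light touches are still felt.
    """
    if pressure <= 0:
        return 0.0
    fraction = min(pressure / full_scale, 1.0)
    return limits.threshold + fraction * (limits.maximum - limits.threshold)


def feedback_commands(readings: dict[str, float], limits: StimLimits) -> dict[int, float]:
    """Convert sensor readings keyed by finger into stimulation levels keyed by electrode."""
    return {
        CHANNEL_MAP[finger]: pressure_to_stim(p, limits)
        for finger, p in readings.items()
        if finger in CHANNEL_MAP
    }


limits = StimLimits(threshold=20.0, maximum=80.0)
print(feedback_commands({"thumb": 5.0, "pinky": 0.0}, limits))  # {2: 50.0, 5: 0.0}
```

The calibration table is where per-user differences show up: if it pairs the thumb sensor with an electrode that actually reaches the nerve fibers of another finger, the user feels the thumb’s contact in the wrong place.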

These technologies already exist. In fact, they existed back in 2015, as you can see from this video:

So what’s the problem?

This is one patient being treated by a team of leading experts financed by a research budget. Even so, the complexities are daunting. For example, getting embedded electrodes to precisely stimulate sensory nerves is tricky. Not everyone’s nerves are the same. One little “misalignment”, for lack of a better term, and the user will experience sensations in his pinky when the bionic hand is in fact receiving feedback from its thumb.

Also, scar tissue can form around embedded electrodes over time, interfering with their ability to properly stimulate the nerves.

It takes true expertise and a lot of money to deal with these issues, so unless we can clone our experts and substantially reduce the costs, this solution is not currently scalable.

Why are we pointing this out? Because there are numerous options either currently available or pending that offer some level of mind control over bionic limbs. Each has its own cost/benefit profile.

Our goal with this article is to help patients understand these options. To do this, we’re going to walk through the available technologies and the implications of each. We’re also going to split this conversation in two, one part for upper limbs and the other for lower limbs, as the bionic devices for each currently operate under entirely different paradigms.

Upper-Limb Bionic Technologies

Direct Control Myoelectric Systems Using Skin Sensors

Direct control myoelectric systems represent the most rudimentary form of mind control.

These systems consist of skin-surface sensors that detect muscle movements in the residual limb. Typically, two muscle sites are monitored. Flexing one muscle equates to one signal, the other muscle to a second signal. Tensing both muscles simultaneously represents a third signal. Two sequential contractions of the same muscle can represent a fourth signal, and so on.

Source: https://link.springer.com/article/10.1007/s40137-013-0044-8/figures/2

These signals are then mapped to specific actions in the bionic limb.
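The two-site scheme described above can be sketched as a small decoder. This is a minimal Python illustration, assuming each site’s signal has already been reduced to a normalized amplitude. The threshold, the 0.5-second double-contraction window, and the `ACTIONS` table are hypothetical values chosen for the example, not settings from any commercial system.

```python
from dataclasses import dataclass

THRESHOLD = 0.3        # hypothetical normalized EMG level that counts as a contraction
DOUBLE_WINDOW_S = 0.5  # hypothetical max time between two bursts for a "double" contraction

# Hypothetical mapping of decoded signals to hand actions.
ACTIONS = {
    "A": "open hand",
    "B": "close hand",
    "A+B": "switch between hand and wrist",
    "double A": "next grip pattern",
}


@dataclass
class Burst:
    start_s: float
    site_a: bool
    site_b: bool


def detect_bursts(samples, dt_s):
    """Group consecutive above-threshold samples from two sites into bursts.

    samples: list of (site_a, site_b) normalized amplitudes, one pair every dt_s seconds.
    """
    bursts, current = [], None
    for i, (a, b) in enumerate(samples):
        above_a, above_b = a > THRESHOLD, b > THRESHOLD
        if above_a or above_b:
            if current is None:
                current = Burst(i * dt_s, above_a, above_b)
            else:
                # Either site crossing the threshold during the burst counts.
                current.site_a |= above_a
                current.site_b |= above_b
        elif current is not None:
            bursts.append(current)
            current = None
    if current is not None:
        bursts.append(current)
    return bursts


def decode(bursts):
    """Turn bursts into signals: single-site, co-contraction, or double contraction."""
    signals, i = [], 0
    while i < len(bursts):
        b = bursts[i]
        if b.site_a and b.site_b:
            signals.append("A+B")
        elif (i + 1 < len(bursts)
              and (bursts[i + 1].site_a, bursts[i + 1].site_b) == (b.site_a, b.site_b)
              and bursts[i + 1].start_s - b.start_s <= DOUBLE_WINDOW_S):
            signals.append("double A" if b.site_a else "double B")
            i += 1  # the second burst is part of this signal
        else:
            signals.append("A" if b.site_a else "B")
        i += 1
    return signals


quiet = (0.0, 0.0)
samples = (
    [(0.8, 0.0), (0.8, 0.0), quiet, quiet, (0.8, 0.0)]  # two quick site-A bursts
    + [quiet] * 5
    + [(0.0, 0.9), (0.0, 0.9)]                         # one site-B burst
    + [quiet] * 5
    + [(0.7, 0.7), quiet]                              # both sites at once
)
signals = decode(detect_bursts(samples, dt_s=0.1))
print(signals)                            # ['double A', 'B', 'A+B']
print([ACTIONS.get(s) for s in signals])  # ['next grip pattern', 'close hand', 'switch between hand and wrist']
```

Every extra signal in a scheme like this is another timing rule the user has to learn, which is why direct control feels more like operating a joystick than moving a hand.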

This type of solution is currently used only for upper-limb bionics. The following video, from 3:03 to 4:44, provides a good description of the actions necessary to control a bionic hand using this approach:

As you can see, this isn’t exactly intuitive. In some respects, it is more like joystick control than mind control. Yet, this is the type of system that is used in most bionic hands today.

For more information on direct myoelectric control, see Finding the Right Myoelectric Control System.

Aside from a lack of intuitive control, one of the biggest problems with direct control myoelectric systems is that the quality of the signals detected by the skin sensors can degrade significantly depending on arm position, whether the residual limb swells or shrinks throughout the day, and the presence of sweat. For this reason, scientists began to develop more sophisticated systems.

Myoelectric Pattern Recognition Using Skin Sensors

What is myoelectric pattern recognition (PR)? Basically, you increase the number of sensor sites from 2 to as many as 16, meaning you collect a lot more data on muscle movements. When the user attempts an action such as picking up a pen from a desk, the PR system recognizes the resulting pattern of muscle movements and translates it into one or more commands for the bionic hand, such as “switch to a pinch grip and close the relevant digits”.
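To illustrate the idea, here is a minimal Python sketch of pattern recognition using mean absolute value (MAV) features and a nearest-centroid classifier. Many published systems use richer time-domain features and classifiers such as linear discriminant analysis (LDA); the simpler classifier is swapped in here for readability, and the grip labels, four sensor sites, and numbers are invented.

```python
import math


def mav_features(window):
    """Mean absolute value (MAV) of each channel over a window of raw EMG samples.

    window: list of samples, each a list with one value per sensor site.
    """
    n_channels = len(window[0])
    return [sum(abs(sample[ch]) for sample in window) / len(window) for ch in range(n_channels)]


def train_centroids(examples):
    """Average the feature vectors recorded while the user attempted each grip.

    examples: dict mapping a grip label to a list of feature vectors.
    """
    centroids = {}
    for label, vectors in examples.items():
        n = len(vectors)
        centroids[label] = [sum(v[ch] for v in vectors) / n for ch in range(len(vectors[0]))]
    return centroids


def classify(features, centroids):
    """Return the grip whose average pattern is closest (Euclidean distance) to the features."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Training data: a few recordings per grip from 4 hypothetical sensor sites.
centroids = train_centroids({
    "rest":  [[0.05, 0.05, 0.05, 0.05], [0.06, 0.04, 0.05, 0.05]],
    "pinch": [[0.60, 0.10, 0.40, 0.10], [0.50, 0.20, 0.50, 0.10]],
    "power": [[0.70, 0.70, 0.60, 0.60], [0.80, 0.60, 0.70, 0.70]],
})

# A new window of raw samples while the user reaches for a pen.
window = [[0.5, -0.2, 0.4, 0.1], [-0.6, 0.1, -0.5, -0.1]]
print(classify(mav_features(window), centroids))  # pinch
```

Training corresponds to a calibration session in which the user repeats each grip a few times; adding more sensor sites simply makes each feature vector longer.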

The advantage of this type of system is that the user can make more intuitive movements and let the software figure out what those movements mean.

We have an entire article on this subject here. Or you can get a quick overview by watching this one-minute video on a pattern recognition system from Ottobock called Myo Plus:

The disadvantage of this system is that it still uses skin-surface sensors, which, again, can be negatively affected by the position of the residual limb, swelling, and sweating.

As a consequence, even though myoelectric pattern recognition systems can theoretically decipher scores of muscle movement patterns, most such systems limit themselves to just a handful of patterns to avoid mistakes, making them far less intuitive than they could be.
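The trade-off of acting on fewer patterns in exchange for fewer mistakes can be sketched as a decision rule placed on top of a classifier. In this hypothetical Python example, the system only acts on an allowed set of grips and holds still whenever the best candidate is weak or too close to the runner-up. The `decide` function and its `min_score` and `min_margin` thresholds are assumptions for illustration; in a real system, restricting the grip set would also change how the classifier itself is trained.

```python
def decide(scores, allowed, min_score=0.4, min_margin=0.2):
    """Pick a grip only when the classifier is clearly confident.

    scores: dict mapping grip label -> classifier confidence (higher is better).
    allowed: the small set of grips the system is configured to act on.
    Returns the chosen grip, or None to leave the hand as it is.
    """
    ranked = sorted(
        ((score, label) for label, score in scores.items() if label in allowed),
        reverse=True,
    )
    if not ranked or ranked[0][0] < min_score:
        return None
    if len(ranked) > 1 and ranked[0][0] - ranked[1][0] < min_margin:
        return None  # two grips look too similar: doing nothing beats the wrong grip
    return ranked[0][1]


scores = {"pinch": 0.50, "power": 0.45, "point": 0.05}
print(decide(scores, allowed={"pinch", "power", "point"}))  # None (pinch vs power too close)
print(decide(scores, allowed={"pinch", "point"}))           # pinch
```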

To overcome the limitations of skin-surface sensors, scientists began surgically implanting sensors directly into the relevant muscles.

Myoelectric Pattern Recognition Using Surgically Implanted Sensors

The concept of embedding sensors directly in the muscles was simple, but implementing it was not. Suitable sensors had to be designed. They needed to be powered wirelessly and to transmit their data wirelessly as well. And a new control system had to be developed to process data received simultaneously from many more sensors at different depths in the muscle tissue.

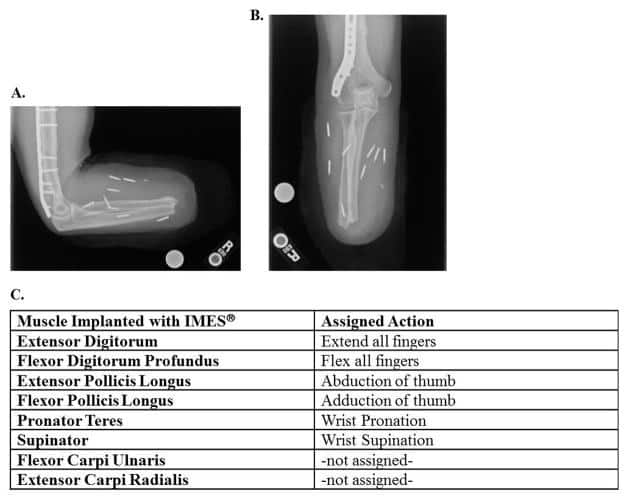

However, that’s ancient history now. We’re already there, as you can see from this x-ray image using a system called IMES (Implantable Myoelectric Sensor System):

If myoelectric signals can be detected in a cleaner and more reliable manner, then fed into a good pattern recognition system, will that give us true mind-controlled bionic limbs?

Not exactly. But before we explain why, let’s talk about what appears to have been a wrong turn.

The Aborted (or Paused) Attempt to Create a Neural Interface for Control

To move a natural hand, the brain sends signals to the motor nerves, which in turn cause muscles to contract and move the joints. When muscles contract, they generate an electrical signal that is large enough to be detected by sensors placed against the skin above those muscles.

From this perspective, myoelectric control systems are actually detecting a secondary, amplified signal.
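Whether the sensors sit on the skin or in the muscle, the raw myoelectric signal is a rapidly alternating voltage, so control systems typically work with its amplitude rather than the raw waveform. Below is a minimal Python sketch of one common way to compute that amplitude, a sliding-window root mean square (RMS); the window length and sample values are illustrative assumptions.

```python
import math


def rms_envelope(signal, window):
    """Root-mean-square amplitude of `signal` over each run of `window` consecutive samples.

    Raw EMG swings above and below zero, so its plain average says little;
    the RMS grows with the strength of the contraction.
    """
    if not 1 <= window <= len(signal):
        raise ValueError("window must be between 1 and len(signal)")
    return [
        math.sqrt(sum(x * x for x in signal[i:i + window]) / window)
        for i in range(len(signal) - window + 1)
    ]


# A weak contraction followed by a stronger one (made-up values).
raw = [1.0, -1.0, 1.0, -1.0, 3.0, -3.0, 3.0, -3.0]
print(sum(raw) / len(raw))       # 0.0: the raw average hides the activity
print(rms_envelope(raw, 4)[0])   # 1.0
print(rms_envelope(raw, 4)[-1])  # 3.0
```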

Early in the quest to achieve mind control over bionic limbs, it was thought that it might be better to surgically embed sensors that directly detect and interpret the signals carried by the motor nerves. However, these signals are much weaker, and therefore harder to detect, than myoelectric signals. Also, the same scar-tissue problems described earlier can eventually degrade the sensors’ ability to detect those signals. Finally, the motor nerves run in the same nerve bundles as the sensory nerves, and trying to implant both sensors for control and electrodes for sensory feedback sometimes led to interference between those two functions.

In the end, it seems that implanting myoelectric sensors in muscle tissue won this particular battle, as they are now the technology of choice in most projects focused on user control. However, this does not mean they are without their flaws.

The Dexterity Problem

If you look at the preceding image of the IMES implanted sensors, you will notice that there are no assigned actions for individual fingers. All the fingers must either flex or extend in unison. The only individual digit action supported is thumb abduction (i.e. moving the thumb away from the palm).

This is a problem because many tasks require that we use individual fingers or combinations of our fingers.

In fairness, more advanced bionic hands and pattern recognition systems are approaching finger-level dexterity. For example, here is a video of a young woman using an early prototype of the BrainRobotics 8-Channel Hand to play the piano, which obviously can’t be done without finger-level dexterity:

However, none of the systems demonstrating this level of dexterity have successfully made it to market. They all remain in the prototype stage, which suggests that we’re not quite there yet.

The Scalability Problem

Let’s give our scientists and engineers a vote of confidence and say that they will soon overcome any remaining control limitations of a myoelectric system using embedded sensors and pattern recognition software.

How will we spread this solution to the millions or even tens of millions of people around the world who need it?

Clearly, performing implant surgeries on all those people is not feasible.

One solution might be to start implanting myoelectric sensors in muscle tissue during amputation, essentially prepping the residual limb ahead of time for potential interaction with a myoelectric control system. But even if this could be implemented, what about the many millions of people who have already undergone an amputation?

There are three possible solutions to this problem:

- Injectable sensors. Experiments with these are already underway, including with the IMES system, and implanting sensors with a needle is much more scalable than traditional surgery. But we’ll simply have to wait and see whether these experiments succeed.

- An improved skin-surface sensor system that can detect all the necessary muscle movements without fail. The preferred solution here seems to be some kind of auto-adjusting socket with scores or even hundreds of sensors in it, essentially turning it into a giant sensor grid that always generates sufficient data for pattern recognition to discern user intent. Alternatively, it may be possible to weave the sensors into a flexible, stretchable socket liner. Either of these solutions would be considerably more scalable than embedded sensors, even injectable ones.

- A non-myoelectric solution. Unlimited Tomorrow’s TrueLimb doesn’t use myoelectric sensors. Instead, it uses a grid of up to 36 sensors embedded in the socket to detect changes in muscle topography. This approach overcomes many of the drawbacks of skin-surface myoelectric systems and is just as scalable. Even better, it’s already on the market. But we don’t know yet whether it can control individual digits (we’re still investigating).

So, if we solve the dexterity and scalability problems, does this complete our quest for mind-controlled bionic limbs? Not even close. For one, we still have the incompleteness problem.

The Incompleteness Problem

Achieving true mind control over bionic limbs is not just a control problem. Even if we establish 100% reliable control over all possible hand movements, the system is still not complete.

For one thing, the user still has no intuitive awareness of the hand’s position.

When you move a natural limb, your brain keeps track of its position based on the stretch and tension in the muscle pairs that control that movement. To curl your arm, for example, you contract your biceps, which in turn stretches your triceps. It is this paired contraction and stretching that allows your brain to track the position of your limbs accurately even with your eyes closed, down to the smallest movements of your fingers and toes.

Absent this awareness, you have to use your eyes to guide every movement of your limb (after all, if you don’t know where your limb is, you have no idea how you should move it next).

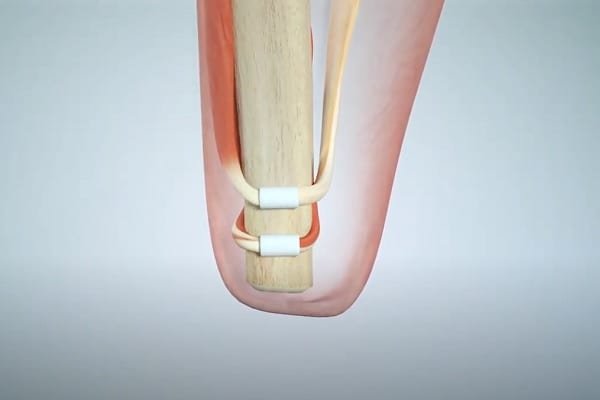

During amputation, these natural muscle pairings are often severed. Fortunately, there is a new surgical procedure called the Agonist-antagonist Myoneural Interface (AMI) that addresses this issue by re-establishing the pairing:

This restores the brain’s natural sense of position and movement (otherwise known as “proprioception”) for the missing portion of the limb.

The control system for a bionic joint is then calibrated so that its movements correspond to the movements of the restored muscle pairs, and, voila, the brain can better control the bionic limb because it can sense its position in space.

All of that is a gross oversimplification, but you can get the full story by reading our complete article on AMI.
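To make the calibration step a little more concrete, here is a deliberately simple Python sketch that maps the activity of a restored muscle pair to a target angle for a bionic joint. It assumes normalized (0 to 1) activation levels for the agonist and antagonist and a linear mapping with per-user gain and joint limits; `JointCalibration`, `target_angle`, and the ankle numbers are hypothetical, and the controllers used in AMI research are considerably more sophisticated.

```python
from dataclasses import dataclass


@dataclass
class JointCalibration:
    neutral_deg: float  # joint angle when both muscles are equally active
    gain_deg: float     # degrees of rotation per unit of activation difference
    min_deg: float      # mechanical limits of the bionic joint
    max_deg: float


def target_angle(agonist: float, antagonist: float, cal: JointCalibration) -> float:
    """Map normalized (0..1) activations of a restored muscle pair to a joint angle.

    Contracting the agonist (which stretches the antagonist) drives the joint one
    way; the reverse drives it the other way. The result is clamped to the joint's range.
    """
    angle = cal.neutral_deg + cal.gain_deg * (agonist - antagonist)
    return max(cal.min_deg, min(cal.max_deg, angle))


# Hypothetical ankle calibration for one user.
ankle = JointCalibration(neutral_deg=0.0, gain_deg=30.0, min_deg=-20.0, max_deg=25.0)
print(target_angle(0.75, 0.25, ankle))  # 15.0
print(target_angle(1.0, 0.0, ankle))    # 25.0 (clamped at the joint limit)
```

The code covers only the control direction. The sense of position itself comes from the restored muscle pair moving in step with the bionic joint, which is why the calibration has to keep the two in agreement.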

However, even if we perform AMI in all future amputations, other issues remain. The biggest one is sensory feedback. To use a bionic hand effectively, the user needs sensory feedback: at the very least, feedback on contact and pressure.

Sensory Feedback for Upper-Limb Bionics

Imagine having no feeling in your hand, i.e. no ability to feel any sensations, even though the hand works perfectly in every other way.

How would you use it? Again, you would have to guide every movement with your eyes.

It turns out that proprioception, sensory feedback, and dexterous control must all work together to provide true mind control over bionic limbs. You can’t achieve it without all three.

So where are we on sensory feedback? As you saw in the earlier video (the man using his bionic hand to handle fruit), we’re quite far along, but most of the historical progress has involved a neural interface (i.e. surgically implanted electrodes that directly stimulate sensory nerves, with feedback provided by sensors on the bionic fingers).

What’s wrong with that? Surgery — especially highly skilled surgery — isn’t exactly scalable, so scientists have been exploring other options, including:

- Targeted Sensory Reinnervation (TSR). The idea here is that the skin over a target muscle is denervated, then reinnervated with the sensory nerve fibers that once served the amputated limb. When this patch of skin is touched, the amputee feels as if the missing limb is being touched. This makes it easier to communicate with the brain (i.e. there is no risk of misaligning an electrode with a nerve) and also eliminates the scar-tissue problem, but it doesn’t eliminate the need for surgery.

- Spinal cord stimulation. This involves tapping into the spinal cord using a simple outpatient procedure and sending sensory feedback through this pathway via wireless transmission. It is a lot less expensive and requires a much less specialized skill set, but the idea grew out of an accidental observation and has not yet been fully explored.

Other Surgical Requirements for Upper-Limb Bionics

Unfortunately, there are other surgical requirements on the road to true mind control over bionic limbs. As you have seen so far, myoelectric control systems depend heavily on muscle activity strong enough to generate clear signals.

However, in some cases, amputation removes or otherwise impairs the muscles needed to generate these signals.

There is a surgical solution called Targeted Muscle Reinnervation (TMR) that addresses this situation. In this procedure, surgeons take motor nerves that once controlled the lost muscles and reroute them to other muscles. The best example involves high upper-arm amputations, where the patient has very little remaining arm musculature. In this case, the motor nerves can be rerouted to chest muscles (e.g. the pectoral muscles). The myoelectric sensors are then placed on the chest, and when the patient attempts to move his missing limb, the chest muscles generate the requisite signals.

So, another solution, but unfortunately it involves yet another surgery.

So Where Are We for True Mind Control Over Upper-Limb Bionics?

First, we’re going to have to modify our amputation surgical procedures. There is no way around this. At some point in the future, procedures like AMI, TMR, and possibly even TSR will have to be done as part of every upper-limb amputation as a way of prepping the residual limb for eventual bionic limb use.

Will this modified amputation procedure include embedding myoelectric sensors in muscle tissues?

That’s hard to say. If some kind of auto-adjusting socket or sensor liner is developed (and both are being researched) in concert with greatly improved skin sensors and pattern recognition software, embedded sensors may not be required. The same is true if Unlimited Tomorrow’s TrueSense evolves to the point where it can control individual finger movements.

But what is clear is that we are still years away from achieving true mind control over bionic upper limbs on any kind of mass scale, especially complete control over bionic hands.

Lower-Limb Bionic Technologies

A Completely Different Paradigm

While the driving goal of most bionic arm and hand designers has been to let the brain control the device, the exact opposite is true of lower-limb bionics. Their designers have essentially outsourced control to local microprocessors that operate completely independently of the user’s mind, as shown in this slightly older video of Ottobock’s C-Leg:

One of the limitations of this approach is that it is entirely reactive. For example, when approaching a slope, the user has no way to tell the device about the upcoming change in terrain. Instead, the user has to take the first step up or down that slope before the device’s sensors and microprocessors adapt to the change.
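A toy model makes this limitation concrete. The Python sketch below assumes the device estimates terrain from the foot’s pitch measured during each completed step; the threshold and mode names are hypothetical, and the sketch does not reproduce the control logic of the C-Leg or any other commercial device.

```python
from enum import Enum


class Mode(Enum):
    LEVEL = "level walking"
    RAMP_UP = "ramp ascent"
    RAMP_DOWN = "ramp descent"


SLOPE_THRESHOLD_DEG = 5.0  # hypothetical foot pitch that counts as a slope


def classify_step(foot_pitch_deg: float) -> Mode:
    """Infer the terrain from the foot's pitch measured during a completed step
    (positive = toes up, i.e. walking uphill)."""
    if foot_pitch_deg > SLOPE_THRESHOLD_DEG:
        return Mode.RAMP_UP
    if foot_pitch_deg < -SLOPE_THRESHOLD_DEG:
        return Mode.RAMP_DOWN
    return Mode.LEVEL


def modes_used(step_pitches):
    """Return the mode the device was in for each step.

    The device can only update its mode from steps already taken, so the
    first step onto a new slope is always made with the old setting.
    """
    mode, used = Mode.LEVEL, []
    for pitch in step_pitches:
        used.append(mode)            # this step happens with the current setting
        mode = classify_step(pitch)  # adaptation only after the step
    return used


# Two level steps, two steps up a ramp, then level ground again.
print([m.value for m in modes_used([0.0, 0.0, 8.0, 8.0, 0.0])])
# ['level walking', 'level walking', 'level walking', 'ramp ascent', 'ramp ascent']
```

Note that the model lags in both directions: the first step onto the ramp uses the level-walking setting, and the first step back onto level ground still uses the ramp setting.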

This lack of anticipation and user control is problematic because a) it puts users at risk of a bad first step, and b) it can make the user’s gait less natural, especially on varied terrain.

To address these issues, scientists have begun to incorporate some of the same myoelectric control techniques that are used for upper-limb bionics.

Note, however, that there are significant differences in the implementations between upper and lower limbs, which we’ll describe in the following sections.

Skin-Surface Sensors vs Surgically Implanted Sensors

Current skin-surface sensors do not appear to be practical for lower-limb bionics, mainly because sensor shift is a bigger problem with lower limbs (e.g. bigger changes in stump size, more socket movement).

Our best evidence for this is that we have yet to see any projects offering lower-limb myoelectric control without implanted sensors, though self-adjusting sockets or sensor liners may change this.

In the meantime, surgically implanted sensors seem to be the only option, which is a huge obstacle for existing amputees who want to assert more control over their lower-limb devices. Of course, injectable sensors could help overcome this obstacle.

Myoelectric Direct Control vs Pattern Recognition

Myoelectric direct control for lower-limb bionics is a non-starter. While it may be workable (albeit awkward) for a bionic-hand user to double-clench a specific muscle to select a grip pattern, there is no equivalent requirement for lower-limb bionics. Lower-limb devices do operate in different modes, but the existing methods of switching modes (buttons, mobile apps, or specific physical actions) already work well enough without introducing the uncertainty of myoelectric sensors.

It is far more likely that pattern recognition systems will be used, albeit with less sophistication than is required to control a bionic hand. This should not be an obstacle to improved mind control over lower-limb bionics, as this type of PR software already exists.

Proprioception

Proprioception is every bit as important for lower-limb bionics as it is for upper-limb devices, perhaps even more so. We say this because it is far more common to use one’s legs/feet without looking at them than it is to use one’s hands.

In any case, the solution here is the same: AMI. Because AMI requires surgery, that’s a big obstacle to delivering mind-controlled lower-limb bionics to the masses, but there doesn’t appear to be any other way to tackle this.

Sensory Feedback for Lower-Limb Bionics

There is a good argument for incorporating sensory feedback into lower-limb bionics, which is made quite succinctly in this video:

Unfortunately, as already discussed, surgically implanting electrodes to directly stimulate sensory nerves is a big undertaking, and certainly not reproducible on a mass scale.

There are also questions of need and return on investment. For example, many amputees already get some sensory feedback from the vibrations passed from their prosthesis into their residual limb. Those who have an osseointegrated implant get even more of this type of feedback.

Is that enough? It depends. If we can improve proactive control via myoelectric sensors, especially if we restore proprioception, then it may well be enough. If not, adding vibrators to lower-limb sockets to warn the user of, say, uneven terrain or a slippery surface might be sufficient. Note that TSR might also play a role here, though, again, that requires surgery.

Other Surgical Requirements for Lower-Limb Bionics

If we’re going to rely on myoelectric sensors as a key part of our control system, TMR may be applicable in some cases, especially for amputations near or at the hip. We just don’t know because there hasn’t been enough experimentation in this area.

Whatever the surgical requirements, if we want to make solutions scalable, we will need to alter our standard amputation procedures at some point to ensure that every residual lower limb is put in the optimum state for eventual integration with bionic devices, same as we’ll need to do for upper limbs. This will eventually be as big a part of this journey as any other.

The Potential for a Lower-Limb Hybrid Model

Given how far we’ve come with independent microprocessor control over existing bionic knees and feet/ankles, it is not likely that manufacturers are just going to abandon this model. Having devices capable of automatically adjusting to certain situations (such as sitting down, standing up, or even stumbling) is helpful and reduces the need for total mind control.

If anything, we’ve started to see upper-limb bionics borrow from this paradigm with devices like Adam’s Hand automatically adjusting to the shape of objects (and others using built-in cameras and artificial intelligence to attempt something similar).

So we must be somewhat flexible in our definition of mind control, and perhaps be willing to relinquish some of that control to microprocessors and software.
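One way to picture such a hybrid is as an arbitration rule that decides, moment to moment, whether the user’s decoded intent or the microprocessor’s own judgment takes precedence. The following Python sketch is hypothetical: the priority order, the 0.8 confidence threshold, and the mode names are assumptions for illustration, not a description of any existing product.

```python
from typing import Optional


def choose_mode(user_intent: Optional[str], intent_confidence: float,
                automatic_mode: str, stumble_detected: bool,
                min_confidence: float = 0.8) -> str:
    """Arbitrate between the user's decoded intent and the device's own sensors.

    Assumed priority order, for illustration only:
      1. Automatic safety reactions such as stumble recovery always win.
      2. A confidently decoded user intent comes next, so the user can announce
         a change (e.g. "ramp ascent") before taking the first step.
      3. Otherwise the microprocessor's own sensor-driven choice is used.
    """
    if stumble_detected:
        return "stumble recovery"
    if user_intent is not None and intent_confidence >= min_confidence:
        return user_intent
    return automatic_mode


print(choose_mode("ramp ascent", 0.9, "level walking", stumble_detected=False))  # ramp ascent
print(choose_mode("ramp ascent", 0.5, "level walking", stumble_detected=False))  # level walking
print(choose_mode("ramp ascent", 0.9, "level walking", stumble_detected=True))   # stumble recovery
```

Putting automatic reactions first reflects the point above: some situations, like a stumble, are better handled by the device than by waiting for the user.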

So Where Are We for True Mind Control Over Lower-Limb Bionics?

The short answer is: closer than we are with bionic hands. Given how much research and progress has been made toward control over bionic hands, this statement might be viewed as controversial. But the fact is, exerting control over lower-limb bionics is a much smaller task. Add proprioception and the proactive detection of user intent using myoelectric sensors, with perhaps a bit of rudimentary sensory feedback tossed in, and we’re pretty much there.

The same cannot be said for upper-limb bionics, which, like it or not, will always be held to the remarkably high standards of our natural hands.

Related Information

For a comprehensive description of all current upper-limb technologies, devices, and research, see A Complete Guide to Bionic Arms & Hands.

For a comprehensive description of all current lower-limb technologies, devices, and research, see A Complete Guide to Bionic Legs & Feet.