Bionic hands will only reach their full potential if we restore true thought control and sensory feedback, which will likely involve a neural interface.

To help readers fully understand the need for at least a partial neural interface for bionic hands, we’re going to walk you through the evolution of some related technologies.

The Limitations of Traditional Myoelectric Control Systems

Myoelectric arms/hands use sensors to detect muscle movements in the residual limb. These sensors are placed against the skin and require the user to flex specific muscles to control a bionic hand.
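What does "flexing specific muscles" look like in software? Below is a minimal Python sketch of a two-site direct-control scheme. It assumes one sensor sits over the forearm flexors (mapped to "close") and one over the extensors (mapped to "open"). The threshold values are hypothetical. Real devices such as the Hero Arm are tuned to each user, and their firmware is not shown here.

```python
import math

# Hypothetical thresholds on a normalized signal scale; real devices tune these per user.
CLOSE_THRESHOLD = 0.3  # flexor-site level needed to close the hand
OPEN_THRESHOLD = 0.3   # extensor-site level needed to open the hand


def envelope(samples):
    """Root-mean-square amplitude of a short window of sensor samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def direct_control(flexor_window, extensor_window):
    """Two-site direct control: each command is tied to one specific muscle site.

    Contracting the flexors closes the hand, contracting the extensors opens it,
    and relaxing both (or contracting both equally) holds the current grip.
    """
    flex = envelope(flexor_window)
    ext = envelope(extensor_window)
    if flex >= CLOSE_THRESHOLD and flex > ext:
        return "close"
    if ext >= OPEN_THRESHOLD and ext > flex:
        return "open"
    return "hold"


print(direct_control([0.5, -0.4, 0.6, -0.5], [0.05, -0.02, 0.04, -0.03]))  # close
print(direct_control([0.02, -0.01, 0.03, -0.02], [0.4, -0.5, 0.45, -0.4]))  # open
```

The decision rests entirely on signal amplitude at two fixed spots on the skin. Sweat, heat, or a shifting socket can change how well a sensor touches the skin. When that happens, the same muscle effort produces a different envelope, and a fixed threshold like this one can start misfiring.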

These types of control systems have been useful. For example, consider this young lady, who lost both her hands to meningitis when she was only 15 months old. Here she is pouring a glass of water using two direct-control myoelectric Hero Arms from Open Bionics:

This and the many tasks she demonstrates in other videos would be extremely difficult, if not impossible, without this technology.

But here are a couple of short videos demonstrating some myoelectric shortcomings at the Cybathlon event in 2016. In this first video, the user has difficulty grasping a cone-shaped object:

At first glance, it might appear that the hand cannot pick up a cone, but this is not the case. The hand used here is an i-Limb, which has a feature called "automatic finger stalling." Each finger stops closing when it meets a certain amount of resistance, while the other fingers keep closing until they also meet resistance. This should allow the user to grasp an irregularly shaped object like a cone, but she lacks the necessary control to do so.
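The stalling behavior is easy to picture as a control loop. The toy Python simulation below closes all fingers step by step and stops each finger independently once it meets enough resistance. It is not the i-Limb's actual firmware. The stall threshold, step size, stiffness, and the point at which each finger touches the cone are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

STEP = 0.05         # how far each moving finger closes per control tick (0 = open, 1 = closed)
STIFFNESS = 10.0    # hypothetical object stiffness: resistance per unit of closure past contact
STALL_FORCE = 0.5   # hypothetical resistance at which a finger stalls


@dataclass
class Finger:
    name: str
    contact_at: float      # closure at which this finger first touches the object (>= 1.0 means never)
    position: float = 0.0  # current closure, 0.0 = fully open, 1.0 = fully closed
    stalled: bool = False


def resistance(finger):
    """Resistance the finger feels: zero until it touches the object, then rising."""
    return max(0.0, finger.position - finger.contact_at) * STIFFNESS


def close_hand(fingers, max_ticks=100):
    """Drive every finger closed; each one stalls independently on enough resistance."""
    for _ in range(max_ticks):
        moving = [f for f in fingers if not f.stalled and f.position < 1.0]
        if not moving:
            break
        for f in moving:
            f.position = min(1.0, f.position + STEP)
            if resistance(f) >= STALL_FORCE:
                f.stalled = True  # this finger stops; the others keep closing
    return fingers


# A cone tapers, so fingers at the narrow end must close further before touching it.
cone = [Finger("thumb", 0.35), Finger("index", 0.30), Finger("middle", 0.40),
        Finger("ring", 0.50), Finger("little", 0.60)]
for f in close_hand(cone):
    print(f"{f.name}: closure {f.position:.2f}, stalled={f.stalled}")
```

Because each finger stops on its own, the fingers settle at different closures that follow the cone's taper. The simulation takes for granted what is hard in the video: the user still has to issue a clean, sustained "close" command at the right moment and hand position.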

In this next example, the user has difficulty picking up a clothespin:

Part of the problem here is his attempt to grasp the clothespin so precisely. We don’t do this with our natural hands. As long as we can grab an object in the right general area, we can use our sense of touch combined with multiple small finger adjustments to achieve the perfect grip.

Finally, to convey that these problems are not isolated, check out this video from a study conducted by Yale University in April 2021. Researchers attached POV cameras to 8 amputees using 13 different hand prostheses, including body-powered devices, electric terminal devices, and multi-articulating bionic hands. Here is a small example of the resulting footage:

Afterward, they analyzed 16 hours of this footage to assess how often the prostheses were being used and in what manner. Here are some of the study’s key observations:

- Prostheses were used on average for only 19% of all recorded manipulations, which is about 1/4 the rate that natural hands are used.

- Transradial amputees with body-powered devices used their devices for 28% of all manipulations versus only 19% for transradial users with myoelectric devices. Yes, you read that correctly. Body-powered devices were used more frequently than bionic devices.

- 79% of body-powered device usage did not involve grasping, versus 60% for bionic hands. This is the one bright spot for bionic hands: when they were used, they were used more frequently for grasping than their body-powered counterparts.

- Multi-articulating bionic hands did NOT result in increased prosthesis use for transradial amputees compared to electric terminal devices such as powered hooks.

These shortcomings are the direct result of inadequate control systems. In the case of myoelectric devices, part of the problem is that the quality of contact between the myoelectric sensors and the skin can vary considerably due to arm position, changes in arm size, and environmental factors like heat and sweat. Another problem is that the control methods are neither intuitive nor precise enough for individual finger movements.

Myoelectric Pattern Recognition

In an attempt to solve the shortcomings of traditional myoelectric control, companies began turning to pattern recognition systems. In these systems, a user simply thinks about moving his hand in a certain way. This triggers a pattern of muscle movements that is detected by a network of sensors and then translated into a command or sequence of commands for the bionic hand. It doesn’t even matter which pattern is triggered as long as it’s repeatable.
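Vendors don't publish their algorithms. However, the research literature commonly describes pattern recognition as a classifier trained on short windows of multi-channel muscle signals. The Python sketch below assumes a band of electrode channels and uses root-mean-square (RMS) amplitude per channel as the feature. A simple nearest-centroid classifier stands in for whatever classifier a product like Myo Plus actually uses, and the training vectors are made-up numbers.

```python
import math


def rms(window):
    """Root-mean-square amplitude of one channel's samples in a short time window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def features(channels):
    """One RMS value per electrode channel: this vector is the muscle 'pattern'."""
    return [rms(ch) for ch in channels]


def train(examples):
    """examples maps each command to several feature vectors recorded while the
    user imagined that movement; returns the average (centroid) per command."""
    centroids = {}
    for command, vectors in examples.items():
        n = len(vectors)
        centroids[command] = [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]
    return centroids


def classify(centroids, vector):
    """Pick the command whose trained centroid is closest to the new pattern."""
    return min(centroids, key=lambda command: math.dist(centroids[command], vector))


# Made-up three-channel training data for three imagined movements.
training = {
    "open": [[0.9, 0.1, 0.2], [0.8, 0.2, 0.1]],
    "close": [[0.1, 0.9, 0.3], [0.2, 0.8, 0.2]],
    "pinch": [[0.3, 0.3, 0.9], [0.2, 0.4, 0.8]],
}
model = train(training)
print(classify(model, [0.85, 0.15, 0.2]))  # open
```

This also shows why it doesn't matter which pattern a user produces. The classifier only needs each command's pattern to be repeatable and distinct from the others.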

The following video provides a quick overview of how Ottobock’s Myo Plus pattern recognition system works, but the same principles apply to all myoelectric pattern recognition systems:

Unfortunately, even good pattern recognition systems can still be defeated by the same sensor problems described previously. They also still lack the precision required for more subtle movements.

One solution to both these problems is to surgically embed the sensors in the patient’s residual arm muscles. This not only eliminates the sensor contact problem but also allows for more sophisticated signal detection, including readings from more muscles at greater depths, which may eventually restore intuitive control over individual fingers. You can read more about this in our article on Implantable Myoelectric Sensors.

Magnetomicrometry (MM) Systems

A new possibility is to completely replace myoelectric systems with magnetomicrometry (MM) technology. In this approach, a pair of small magnetic beads is inserted into each muscle that is to be used for control. Using magnetic fields, sensors continuously measure the distance between the two beads to determine the length and speed of the muscle’s movements. These movements are in turn used to control the movements of the bionic device. The big improvements here are that MM sensors are incredibly precise and they don’t rely on contact with the skin to work.
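Once the beads have been located, turning their positions into a muscle length and speed is simple geometry. The Python sketch below assumes that the hard step has already been done: solving for each bead's 3D position from the magnetic field readings. It also assumes positions arrive at a fixed sample interval. The function name, units, and sample values are our own illustrative choices.

```python
import math


def muscle_length_and_speed(samples, dt):
    """Estimate muscle length and speed from tracked bead positions.

    samples: one (bead_a, bead_b) pair of (x, y, z) positions in mm per time step.
    dt: time between samples in seconds.
    Returns (lengths in mm, speeds in mm/s). Speed is a backward finite difference,
    so the first entry is 0.0; negative speed means the muscle is shortening.
    """
    lengths = [math.dist(a, b) for a, b in samples]
    speeds = [0.0] + [(lengths[i] - lengths[i - 1]) / dt for i in range(1, len(lengths))]
    return lengths, speeds


# Hypothetical 100 Hz samples: bead B moves toward bead A as the muscle contracts.
samples = [((0, 0, 0), (0, 0, 30.0)),
           ((0, 0, 0), (0, 0, 29.0)),
           ((0, 0, 0), (0, 0, 27.5))]
lengths, speeds = muscle_length_and_speed(samples, dt=0.01)
print(lengths, speeds)
```

Because the measurement comes from the beads themselves rather than from the skin surface, sweat or a shifting socket does not change these numbers.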

Below is a short video on this approach. It features a lower-limb device, but the technique is equally applicable to bionic hands.

However, whether we perfect MM or myoelectric technologies, we’re still missing one other important aspect of control.

Restoring a Sense of Self

Almost all medical problems are more complex than they appear. When we talk about user control over bionic hands, most of us interpret this as getting the hand to obey the user’s intent. But is that intent well-informed? For example, to grasp an object, you need to know both the current position of your hand as well as the desired position.

We track the positions of our natural hands, and in fact all our joints, using proprioception. More specifically, our brain knows the precise location of our limbs based on the state of the muscle pairs that control them. For example, to curl your arm toward your chest, you contract your biceps, which stretches your triceps. Your brain intuitively uses these muscle states to calculate the position of your arm. That's why you can close your eyes and still know the exact location of all your body parts!

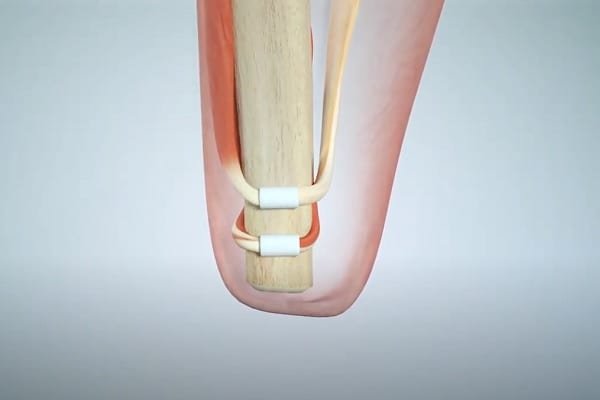

A recent procedure called the Agonist-antagonist Myoneural Interface (AMI) restores this capability for amputees (or, more typically, ensures it is never lost at the time of amputation). In this procedure, surgeons recreate muscle pairs that would otherwise be severed:

Myoelectric or MM sensors are then used to detect the movement of the muscles. Calibrate a bionic device to mirror these movements and, voila, the brain can effectively track the position of the bionic hand! Note that both AMI and MM were developed by the same research group at MIT.
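On the device side, "calibrate a bionic device to mirror these movements" can be pictured as a mapping from the state of the muscle pair to a joint angle. The Python sketch below uses a deliberately simple, hypothetical linear calibration. It records the pair's length difference at both ends of the joint's range and interpolates between them. This is not the MIT group's method, and real systems are considerably more sophisticated.

```python
from dataclasses import dataclass


@dataclass
class PairCalibration:
    """Hypothetical calibration for one agonist-antagonist pair (lengths in mm).

    The pair moves in opposition, so we track agonist length minus antagonist
    length, recorded at both ends of the joint's range during calibration.
    """
    diff_extended: float         # length difference with the joint fully extended
    diff_flexed: float           # length difference with the joint fully flexed
    angle_extended: float = 0.0  # device joint angle (degrees) for the extended pose
    angle_flexed: float = 90.0   # device joint angle (degrees) for the flexed pose


def joint_angle(cal, agonist_len, antagonist_len):
    """Map the current state of the muscle pair to a joint angle for the device."""
    diff = agonist_len - antagonist_len
    t = (diff - cal.diff_extended) / (cal.diff_flexed - cal.diff_extended)
    t = min(1.0, max(0.0, t))  # stay within the calibrated range
    return cal.angle_extended + t * (cal.angle_flexed - cal.angle_extended)


# Calibration: extended pose agonist 40 mm / antagonist 30 mm; flexed pose 30 mm / 40 mm.
cal = PairCalibration(diff_extended=40 - 30, diff_flexed=30 - 40)
print(joint_angle(cal, 35.0, 35.0))  # 45.0
```

The goal is agreement. When the muscles the brain "feels" say the joint is halfway flexed, the bionic joint should be halfway flexed too.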

Some combination of the above technologies should eventually provide users with excellent control over their bionic hands. But there is still a need for sensory feedback and that requires a neural interface.

The History of Neural Interfaces

Neural interfaces have been around since the 1950s, beginning with the cochlear implant and the heart pacemaker, though it is doubtful that anyone at the time viewed the interfaces used by these devices as separate from the devices themselves.

The modern concept of a neural interface began to emerge in the 1970s with research on brain-computer interfaces (BCI).

The expansion of this concept as a means to control bionic limbs is a much more recent development, only achieving critical mass with funding from programs like Hand Proprioception and Touch Interfaces (HAPTIX), launched in 2015 by the U.S. Government’s Defense Advanced Research Projects Agency (DARPA).

Within a few short years, universities working with DARPA were already creating impressive prototypes, as shown in this lighthearted video by the University of Utah:

How Neural Interfaces are Used in Bionic Hands

We would explain this to you in writing, but there happens to be a PBS video that already does a great job of it. It involves an early prototype built by Case Western Reserve University, another of DARPA's university partners:

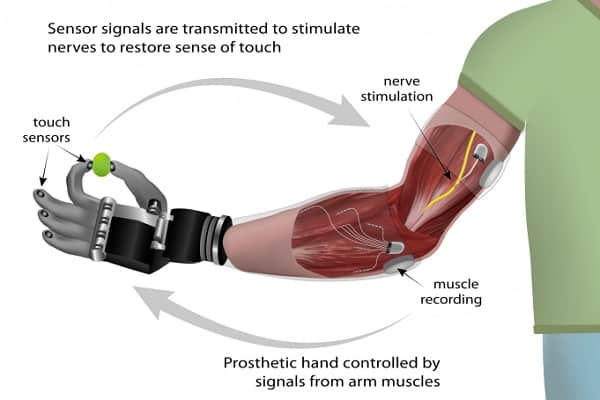

As the video shows, sensors on the bionic hand transmit signals to electrodes that have been surgically embedded in the user’s arm. The electrodes stimulate the nerves that they are attached to, which then transmit the requisite information to the brain.
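Here is one way to picture that sensor-to-nerve path in code. The Python sketch below assumes that each pressure sensor on the hand is paired with an electrode contact that evokes sensation in the same region. It encodes pressure as a stimulation pulse rate using a simple linear mapping. The region names, threshold, and rates are placeholders, not clinical parameters, and real systems often use more elaborate stimulation patterns.

```python
from dataclasses import dataclass


@dataclass
class ElectrodeChannel:
    """One electrode contact that evokes sensation in one region of the hand.
    All numbers are placeholders for illustration, not clinical parameters."""
    region: str
    threshold: float = 0.05     # normalized pressure below which nothing is sent
    min_rate_hz: float = 10.0   # pulse rate for the lightest detectable touch
    max_rate_hz: float = 100.0  # pulse rate at full-scale pressure


def stimulation_rate(channel, pressure):
    """Linearly map a normalized pressure reading (0..1) to a pulse rate in Hz."""
    p = min(1.0, max(0.0, pressure))
    if p < channel.threshold:
        return 0.0  # below threshold: send nothing (treat as no contact)
    span = (p - channel.threshold) / (1.0 - channel.threshold)
    return channel.min_rate_hz + span * (channel.max_rate_hz - channel.min_rate_hz)


def feedback_frame(channels, pressures):
    """Route each hand sensor's reading to the electrode for the same region."""
    return {ch.region: stimulation_rate(ch, pressures.get(ch.region, 0.0)) for ch in channels}


channels = [ElectrodeChannel("thumb tip"), ElectrodeChannel("index tip"), ElectrodeChannel("palm")]
print(feedback_frame(channels, {"thumb tip": 0.6, "index tip": 0.02}))
```

Keeping a fixed sensor-to-electrode pairing matters. The user learns that a given sensation always means contact at the same spot on the hand.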

That’s how the sensory feedback portion of the system works. The methods used to exert control over the bionic hand vary.

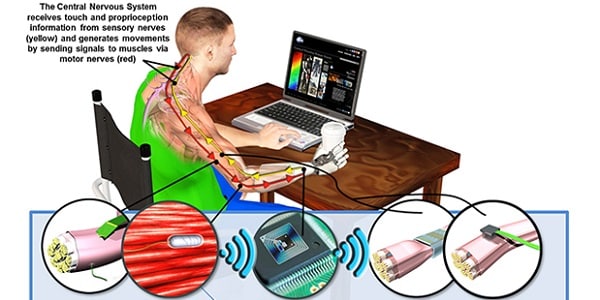

Some earlier approaches attempted to use neural interfaces for control as well as sensory feedback:

In this model, electrodes interacted directly with both motor nerves and sensory nerves. In theory, this direct interaction with motor nerves should provide superior control. However, complications with cross-talk, increased costs, and also an increased risk of scarring required a different solution.

The current preferred solution is to use myoelectric sensors for the control functions and a neural interface for sensory feedback, as described in the preceding video and shown in this diagram:

The primary advantage of this hybrid model over a pure myoelectric system is that the user’s attempted actions are better informed by the sensory feedback.

If MM fulfills its promise, it may eventually replace the myoelectric components in this model.

For now, let’s take a closer look at the neural interface portion of the model.

The Current State of Neural Interfaces

The preceding “Science of Touch” video is seven years old. If you want to truly understand how far we’ve come with neural interfaces, watch the opening segment of this more recent video from 1:27 to 10:49. As you do so, ignore some of the technical terms and focus instead on the general nature of Dr. Tyler’s explanation:

Some of the central points of this segment are:

- The nervous system remains highly organized all the way from the peripheral nerves to the brain or at least high in the spinal cord, meaning that even in cases such as an amputation near the shoulder, the system is still organized enough to be able to differentiate finger sensations.

- We are slowly learning how to communicate with this system in increasingly sophisticated ways.

Now watch the segment from 11:31 to 27:07. We know this is a much longer segment than we typically show, but we encourage you to watch it nonetheless. Not only will it give you a solid understanding of the current status of neural interfaces for bionic hands, but it will also fill you with hope and optimism for the future.

Speaking of hope and optimism for the future, check out these last two videos, both relatively short. The first describes some of the work going on at the Cleveland Clinic, which we present here to give you a sense of how active this area of research has become:

Finally, this last video is perhaps the most hopeful of all because it shows you how we might fulfill one of the most important goals of end-users, as described by Dr. Tyler, which is the ability to hold another person’s hand and feel the warmth of human touch:

Remaining Challenges

Challenge # 1 — Eliminate Invasive Surgery

Surgery is surgery, with all its attendant risks of scarring and infection. It is also expensive.

There is talk that the small magnetic beads used in MM solutions will be injectable, which would eliminate the need for invasive surgery on the control side of the equation.

Looking at the preceding videos, it is difficult to imagine implementing a neural interface without surgery. However, researchers at the University of Pittsburgh recently discovered that existing spinal cord stimulators can be used to produce a sense of touch in missing limbs. With 50,000 people already receiving these stimulators every year in the U.S. alone, many doctors are already trained in the implant procedure. Even more important, it is a relatively simple outpatient procedure, which avoids the potential complications of more invasive surgery.

There is still a fair bit of work to be done before this can be used as a neural interface for a bionic hand, but here is what one patient said about her experience with the new technique:

Challenge # 2 — Improve Signal Processing and Interpretation

Signals sent from bionic sensors to electrodes must be processed and interpreted to determine the correct stimulation of the attached nerves. Similarly, commands sent from the brain to the nerves must be processed and interpreted to determine the signals that need to be sent to the bionic hand’s control system.

All of this is not only processing-intensive; it also requires sophisticated artificial intelligence.

Currently, the AI routines are still too specific. That is, they require too much task-specific training.

For example, a baseball and an orange are quite similar. The baseball has seams, whereas the orange is heavier with pitted skin. But both objects are of a similar size and shape.

When neural interface AI routines have been trained to handle a baseball, they should know roughly how to handle an orange without having to be retrained all over again.

Right now, that is not the case with many similar tasks, which imposes too high a training cost on all involved.

Another problem is that the stimulation of nerves can sometimes be imprecise and the brain seems incapable of adjusting its sensory map to compensate. This has implications for both user control and sensory feedback.

Challenge # 3 — Reduce Costs

In what is a near-universal complaint about all bionic technologies, we need to get the costs down to make the technologies more accessible.

There is no easy solution to this problem. But if we need a little hope on this goal, look no further than the cost of traditional myoelectric systems. A few years ago, even the most basic bionic hand cost many tens of thousands of dollars. Now there are 3D-printed bionic hands available for a fraction of that price.

We can do this. We just need to keep pushing!

Related Information

For information on sensory feedback, please see Sensory Feedback for Bionic Hands, Sensory Feedback for Bionic Feet, and Understanding Bionic Touch.

Click this link for more information on bionic hand control systems.

For a comprehensive description of all current upper-limb technologies, devices, and research, see our complete guide.